How to build a secure, automated AI data ingest workflow using MASV and integrated data orchestration and storage tools

The AI business is as data-intensive as it gets. AI systems require mountains of data for generative model training, fine-tuning, edge case learning, and context/retrieval for retrieval-augmented generation (RAG) use cases.

Unsurprisingly, then, one of the biggest challenges facing AI companies and researchers is the data ingestion process, including cost-effectively storing, transporting, and securing all that data while improving time to market and staying compliant with data protection regulations.

That’s why this guide illustrates how to create a secure AI data ingest workflow using automated systems. After all, an AI pipeline is only as good as its weakest link – and that link is often the data ingest workflow.

Table of Contents

- What Is a Secure AI Data Ingest Workflow?

- Common Challenges in Scaling AI Data Ingest

- The Secure AI Data Ingest Workflow

- Automating and Scaling the Workflow

- The MASV Advantage for Petabyte-Scale Data Ingest

- Real‑World Application: MASV in an AI Pipeline

- Building a Secure AI Data Ingest Workflow With MASV

What Is a Secure AI Data Ingest Workflow?

A secure AI data ingest workflow moves large volumes of data for model training and other purposes through encrypted channels, validated inputs, access controls, and secure storage. Regular audits and monitoring maintain integrity and security throughout the data lifecycle.

Secure AI data ingest prioritizes raw data integrity, compliance, and anomaly detection to safeguard sensitive information and prevent data poisoning through data governance, encryption, validation, and lineage:

- Data governance enforces policies, roles, and regulatory rules to control data access and usage.

- Data encryption protects data in transit and at rest.

- Data validation ensures data accuracy, integrity, and trust before processing.

- Data lineage tracks data origins and transformations for transparency and auditability.
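To make the validation and lineage pillars concrete, here is a minimal Python sketch (standard library only) that computes a SHA-256 checksum for an incoming file and wraps it in a lineage record. The record's field names (`source`, `transformations`, `received_at`, and so on) are hypothetical, not a MASV or industry schema; a real pipeline would write such records to its own lineage or catalog system.

```python
import hashlib
from datetime import datetime, timezone
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 8 * 1024 * 1024) -> str:
    """Hash a file in fixed-size chunks (8 MiB by default) so large files never load fully into memory."""
    digest = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def lineage_record(path: Path, source: str, transformations: list[str]) -> dict:
    """Build a hypothetical lineage entry: what the file is, where it came from, what was done to it."""
    return {
        "file": path.name,
        "size_bytes": path.stat().st_size,
        "sha256": sha256_of(path),           # lets any later stage re-verify integrity
        "source": source,                    # e.g. a contributor or upload portal ID
        "transformations": transformations,  # ordered list of processing steps applied so far
        "received_at": datetime.now(timezone.utc).isoformat(),
    }
```

Recomputing `sha256_of()` at the destination and comparing it with the recorded value is a simple way to confirm that a file was not altered in transit.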

A high-performing secure ingest workflow must also include automated file movement, anomaly detection, data anonymization, and compliance checks.

Common Challenges in Scaling AI Data Ingest

However, scaling an AI model training data pipeline – so AI companies can keep data secure while developing models more efficiently for faster time to market – remains a massive challenge because of:

- Network strain: Petabyte-scale unstructured data ingestion can easily strain or even overwhelm networks, storage, and pipelines, creating serious throughput and latency bottlenecks.

- File sharing problems: Not all data ingestion tools for AI are created equal. UDP file transfer solutions like Signiant and Aspera are difficult and time-consuming for contributors to set up and use; consumer-grade file sharing like WeTransfer and Dropbox can’t reliably handle large file volumes.

- Security overhead: Managing network keys, user accounts, access controls, and firewall rules for a UDP-based file transfer platform introduces significant security overhead because of the need for continuous key rotation, strict access auditing, and careful firewall configuration.

- Risk of malicious or bad data inputs: Large, distributed enterprise data pipelines increase the risk of unverified or malicious data entering the system, and require strong authentication and source validation.

- Risk of non-compliance: Enforcing consistency, privacy controls, and compliance with regulations such as GDPR and security standards such as SOC 2 Type II and ISO 27001 becomes increasingly complex as data volumes grow.

This is why it’s important to automate data ingestion for machine learning models: A secure, automated data ingest and transfer platform can be the missing link that accelerates and secures data movement, enabling faster model training and development.

Automate secure AI data ingest

Connect MASV with data orchestrators and cloud storage for an end-to-end automated and secure data ingest pipeline.

The Secure AI Data Ingest Workflow

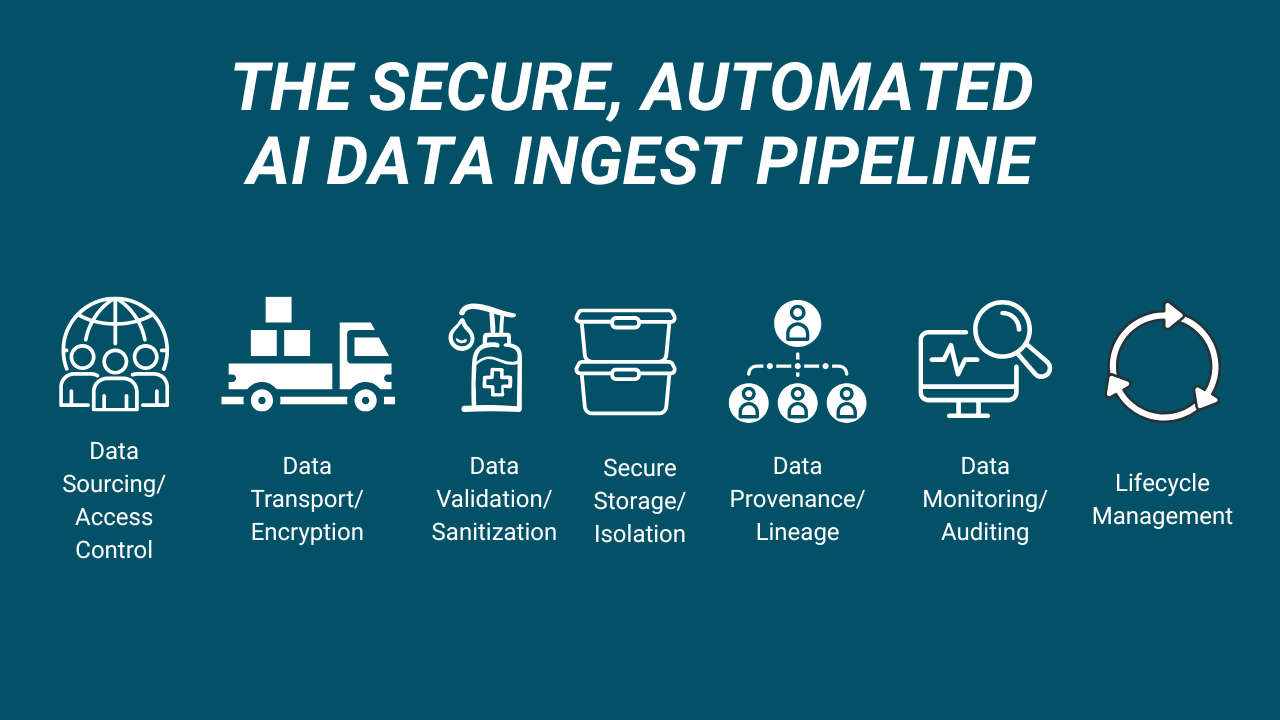

Achieving security automation and preventing data breaches in AI workflows requires several steps, from data sourcing/access control to continuous monitoring, auditing, and lifecycle management. Many of these data security steps can be performed concurrently, as long as the secure workflow is set up before ingestion begins.

Here’s a breakdown of each step, along with how a secure and automated workflow using an upload tool like MASV can help.

Table: The secure, automated AI data ingest workflow

| Element | What's Involved | How Automated & Secure Data Ingest Helps |
|---|---|---|
| Data sourcing/access control | Identifying/authorizing trusted data sources, enforcing IAM | Secure upload portals, REST API keys/JSON Web Tokens, MFA and SSO, password protection, file expiry and download limits, built-in consent management tools |
| Data transport/encryption | Encrypting in-transit data, automating data transfer | Minimum TLS 1.2 encryption on in-transit transfers; automated accelerated transfer tools |
| Validation/sanitization | Automatic detection of malformed or poisoned data | Pre-transfer validation via Upload Rules (file naming conventions and restricted file extensions), automatic malware scanning and checksum verification, integration with data orchestration tools |
| Secure storage/isolation | Encrypting at-rest data, central key management, segmented environments | AES-256 encryption on at-rest data, direct-to-cloud bucket delivery through a centralized and secure uploader, centralized API key management in the MASV Web App, private accelerated global network, zero-trust architecture that prevents lateral movement |
| Provenance/lineage | Logging of data origin and transformation, metadata tracking | Transfer History Log/Package Activity Feed for audit trails; metadata around file source, destination, timestamps, and user identity; webhook event logs |
| Monitoring/auditing | Continuous logging, access tracking, and compliance status | Detailed event logs and transfer metadata can be integrated with SIEM tools through APIs and webhooks; ability to export full transfer logs |
| Lifecycle management | Rules applied around data retention and secure deletion | Transfer auto-expiry after five days by default; programmable data retention policies through custom transfer expiry dates; permanent and unrecoverable data deletion once the data retention period has passed |

1. Data sourcing and access control

- What’s required: Identify and authorize trusted sources of high-quality data, and enforce identity and access management (IAM) for all collaborators across systems to prevent rogue or malicious inputs.

- How automated & secure data ingest helps: MASV uses secure upload portals for web-based upload, while its REST API requires an API key or JSON Web Token (JWT) to authorize requests. IAM tools such as multi-factor authentication (MFA) and single sign-on (SSO) with SAML-based authentication prevent unauthorized account access when ingesting data. Password protection on transfers, automatic file expiry, and download limits further restrict unauthorized file access.
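Because API requests must carry credentials, automation scripts should load those credentials at runtime rather than hard-coding them. A minimal standard-library sketch follows; the environment variable name `MASV_API_KEY`, the `X-API-KEY` header name, and the base URL are assumptions for illustration, so confirm the exact header and endpoints in MASV's API reference.

```python
import os
import urllib.request

# Hypothetical base URL; check MASV's API reference for the real endpoints.
API_BASE = "https://api.example.com/v1"


def authorized_request(path: str, method: str = "GET") -> urllib.request.Request:
    """Build a request that carries the API key from the environment.

    The key is read at call time (never hard-coded or committed to source control).
    The header name is an assumption to verify against the provider's docs.
    """
    api_key = os.environ.get("MASV_API_KEY")
    if not api_key:
        raise RuntimeError("MASV_API_KEY is not set; refusing to send an unauthenticated request")
    return urllib.request.Request(
        f"{API_BASE}{path}",
        method=method,
        headers={"X-API-KEY": api_key, "Accept": "application/json"},
    )
```

The request would then be sent with `urllib.request.urlopen(authorized_request("/some/endpoint"), timeout=30)`. Failing loudly when the key is missing avoids silently sending unauthenticated calls from a misconfigured worker.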

2. Data transport and encryption

- What’s required: Encrypt all in-flight data, both to protect it against interception, tampering, or eavesdropping as it travels between systems and to stay compliant with data protection standards. Data transfer should also be automated.

- How automated & secure data ingest helps: MASV uses a minimum of TLS 1.2 encryption on all in-transit transfers, combined with file transfer automation tools such as Watch Folders and the MASV API for hands-free ingest workflows.
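Scripts elsewhere in the pipeline (for example, ones that call storage or orchestration APIs) can enforce the same TLS 1.2 floor on their own connections. A minimal sketch using Python's standard `ssl` module:

```python
import ssl


def tls12_minimum_context() -> ssl.SSLContext:
    """Return a client context that verifies certificates and refuses anything older than TLS 1.2."""
    ctx = ssl.create_default_context()  # certificate and hostname verification enabled
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    return ctx
```

Passing the context explicitly, as in `urllib.request.urlopen("https://storage.example.com/health", context=tls12_minimum_context(), timeout=30)` (placeholder URL), makes the requirement visible in code rather than relying on interpreter or OpenSSL defaults.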

3. Validation and sanitization

- What’s required: AI pipelines ingesting large amounts of data at high velocity require automatic detection and exclusion of malformed or poisoned data to protect data quality and model integrity. Malformed data can break processing or cause errors, while poisoned data can bias or corrupt models.

- How automated & secure data ingest helps: MASV Upload Rules allow account owners to set custom file delivery specifications, such as enforcing file naming conventions and maximum file sizes, and restricting specific file extensions (such as .exe files). Automatic malware scanning on all uploads and checksum verification on transferred data help ensure that data arrives clean and uncorrupted.

- MASV can also be integrated with popular data orchestration platforms to automate data validation tasks.
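Upload Rules are enforced by MASV itself, but an orchestrator can run the same kinds of checks before handing files off, so rejects surface early in the pipeline. This is a minimal sketch of such a pre-flight check; the naming pattern, size limit, and blocked extensions are hypothetical examples, not MASV defaults.

```python
import re
from dataclasses import dataclass, field
from pathlib import PurePath


@dataclass
class UploadRules:
    # Hypothetical example policy; replace with your own delivery spec.
    name_pattern: str = r"[a-z0-9_]+_\d{8}\.[a-z0-9]+"  # e.g. dataset_20240131.parquet
    max_size_bytes: int = 50 * 1024**3                   # 50 GiB
    blocked_extensions: set[str] = field(default_factory=lambda: {".exe", ".bat", ".dll"})


def violations(filename: str, size_bytes: int, rules: UploadRules) -> list[str]:
    """Return human-readable rule violations; an empty list means the file passes."""
    problems = []
    suffix = PurePath(filename).suffix.lower()
    if suffix in rules.blocked_extensions:
        problems.append(f"blocked extension: {suffix}")
    if not re.fullmatch(rules.name_pattern, filename):
        problems.append("name does not match the naming convention")
    if size_bytes > rules.max_size_bytes:
        problems.append(f"file too large: {size_bytes} bytes")
    return problems
```

Returning every violation at once, rather than stopping at the first, gives contributors a complete list of fixes in a single round trip.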

4. Secure storage and isolation

- What’s required: All data at rest in storage systems must be encrypted at all times. API keys should be managed centrally, and the transfer platform should use segmented environments to minimize the risk of data leakage or unauthorized lateral movement.

- How automated & secure data ingest helps: MASV employs AES-256 encryption on all at-rest data, and its centralized, secure web uploader (MASV Portals) provides a single entry point into shared storage for added security. Centralized key management is provided in the MASV Web App, and MASV uses a private accelerated network with a “zero trust” AWS backbone that prevents an attacker’s lateral movement in the system.
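The destination bucket should encrypt what lands in it too. As a minimal sketch, assuming the destination is an Amazon S3 bucket managed with boto3, the functions below apply default encryption: SSE-S3 (AES-256) by default, or SSE-KMS when a key ID is supplied so keys are managed centrally in AWS KMS. The bucket name and key ARN are placeholders, and the S3 client is passed in so the logic can be tested without AWS credentials.

```python
from typing import Optional


def encryption_config(kms_key_id: Optional[str] = None) -> dict:
    """Build the ServerSideEncryptionConfiguration argument for put_bucket_encryption."""
    if kms_key_id is None:
        # SSE-S3: AES-256 with keys managed by S3
        rule = {"ApplyServerSideEncryptionByDefault": {"SSEAlgorithm": "AES256"}}
    else:
        # SSE-KMS: keys managed centrally in AWS KMS; bucket keys reduce KMS request volume
        rule = {
            "ApplyServerSideEncryptionByDefault": {"SSEAlgorithm": "aws:kms", "KMSMasterKeyID": kms_key_id},
            "BucketKeyEnabled": True,
        }
    return {"Rules": [rule]}


def enforce_bucket_encryption(s3_client, bucket: str, kms_key_id: Optional[str] = None) -> None:
    """Apply default encryption to the landing bucket (s3_client is a boto3 S3 client)."""
    s3_client.put_bucket_encryption(
        Bucket=bucket,
        ServerSideEncryptionConfiguration=encryption_config(kms_key_id),
    )
```

In practice this would be called as `enforce_bucket_encryption(boto3.client("s3"), "my-ai-landing-bucket")`. Since S3 has encrypted new objects with SSE-S3 by default since January 2023, the main value is making the policy explicit or switching it to customer-managed KMS keys.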

5. Provenance and lineage

- What’s required: Logging data origins and transformations, along with metadata tracking, is crucial for demonstrating where data originated, how it’s been transformed, and who has accessed it, improving trustworthiness and supporting compliance.

- How automated & secure data ingest helps: The MASV Transfer History Log, Package Activity Feed, and webhook event logs record key events in a file’s lifecycle, from upload to download and deletion, providing a verifiable audit trail. MASV creates and maintains metadata around file source, destination, timestamps, and user identity.
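To keep a provenance trail on the pipeline's side as well, an orchestrator can append each webhook event it receives to an append-only JSON Lines log. The minimal sketch below chains each entry to the hash of the previous one, so editing or deleting an earlier entry is detectable. The event fields used in the usage example (`event`, `package_id`, `user`) are hypothetical placeholders, not MASV's documented webhook schema.

```python
import hashlib
import json
from pathlib import Path

GENESIS = "0" * 64  # "previous hash" for the first entry


def _entry_hash(prev_hash: str, event: dict) -> str:
    body = {"prev_hash": prev_hash, "event": event}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


def append_audit_event(log_path: Path, event: dict) -> str:
    """Append an event to a hash-chained JSON Lines log and return the new entry's hash."""
    prev_hash = GENESIS
    if log_path.exists():
        lines = log_path.read_text().splitlines()
        if lines:
            prev_hash = json.loads(lines[-1])["entry_hash"]
    entry_hash = _entry_hash(prev_hash, event)
    with log_path.open("a") as fh:
        record = {"prev_hash": prev_hash, "event": event, "entry_hash": entry_hash}
        fh.write(json.dumps(record, sort_keys=True) + "\n")
    return entry_hash


def verify_chain(log_path: Path) -> bool:
    """Recompute every hash and check that each entry points at its predecessor."""
    prev_hash = GENESIS
    for line in log_path.read_text().splitlines():
        entry = json.loads(line)
        if entry["prev_hash"] != prev_hash or entry["entry_hash"] != _entry_hash(prev_hash, entry["event"]):
            return False
        prev_hash = entry["entry_hash"]
    return True
```

A hash chain only proves tampering if the latest hash is also stored somewhere separate (for example, in a SIEM); otherwise an attacker could rewrite the whole file consistently.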

6. Monitoring and auditing

- What’s required: Continuous logging and access tracking provide real‑time visibility into data activity. This helps detect anomalies, unauthorized access, and data drift to ensure data integrity and security.

- How automated & secure data ingest helps: Detailed event logs and billing history can be exported as a CSV file. This information can also be integrated with security information and event management (SIEM) tools such as Splunk, Sumo Logic, or Microsoft Sentinel (formerly Azure Sentinel) through APIs and webhooks, helping to centralize monitoring and detect anomalies.
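Even before SIEM integration, an exported transfer log can be screened with simple rules. The minimal sketch below flags transfers far larger than the typical transfer, using the median as the baseline so a single huge outlier does not skew it. The CSV column names (`package_id`, `size_bytes`) and the factor of 10 are hypothetical; map them to the columns the export actually contains and tune the threshold to your traffic.

```python
import csv
import io
import statistics


def flag_oversized_transfers(csv_text: str, factor: float = 10.0) -> list[str]:
    """Return package IDs whose size exceeds `factor` times the median transfer size."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    if not rows:
        return []
    sizes = [int(row["size_bytes"]) for row in rows]
    baseline = statistics.median(sizes)  # robust to a few extreme values
    return [row["package_id"] for row, size in zip(rows, sizes) if size > factor * baseline]
```

For example, with transfers of 1,000, 1,200, 900, and 50,000 bytes, the median is 1,100 bytes, so only the 50,000-byte transfer is flagged.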

7. Lifecycle management

- What’s required: Rules must be applied around data retention and secure deletion to ensure compliance, privacy, and efficiency. These rules limit how long sensitive data is stored, reducing exposure risk, while secure deletion prevents the recovery of obsolete data.

- How automated & secure data ingest helps: MASV provides lifecycle management tools such as automatic expiry of transferred files after five days (unless account owners specify otherwise), custom transfer expiry dates, and unrecoverable data deletion once files have expired.
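MASV applies expiry on its side, but downstream systems (for example, a cleanup job for local staging copies) may need to mirror the same retention policy. A minimal sketch of that date arithmetic, assuming the five-day default described here and a hypothetical per-transfer override in days:

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

DEFAULT_RETENTION = timedelta(days=5)  # default expiry described in this section


def expiry_for(uploaded_at: datetime, retention_days: Optional[int] = None) -> datetime:
    """Compute when a transfer expires: the default, or a custom retention period."""
    retention = DEFAULT_RETENTION if retention_days is None else timedelta(days=retention_days)
    return uploaded_at + retention


def is_expired(uploaded_at: datetime, now: datetime, retention_days: Optional[int] = None) -> bool:
    """True once the retention period has passed and local copies should be deleted."""
    return now >= expiry_for(uploaded_at, retention_days)
```

Using timezone-aware UTC timestamps throughout avoids off-by-hours errors when collaborators and servers sit in different time zones.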


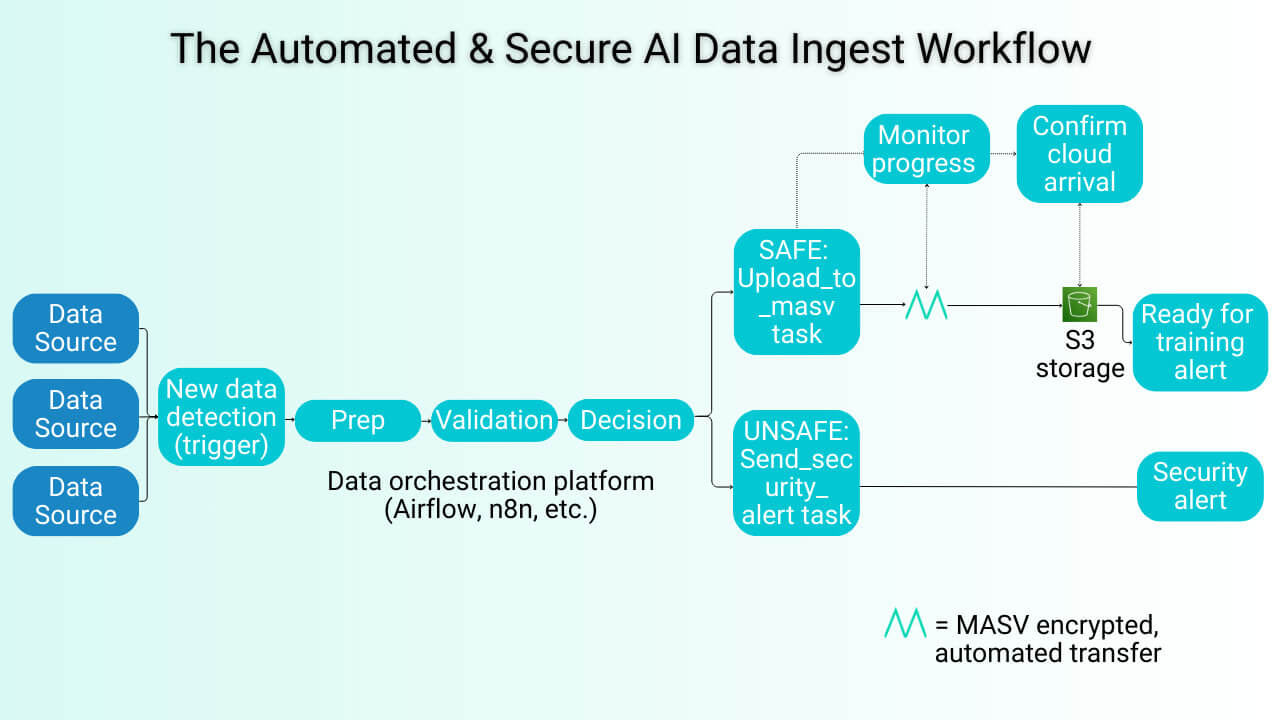

Automating and Scaling the Workflow

Workflows involving petabytes of data can be made secure by following the steps above, but will quickly become unmanageable without automation. That’s why a sustainable AI data ingest process must self‑manage events such as new data upload, validation, retries, and notifications.

This is where MASV’s automation ecosystem shines.

MASV’s out-of-the-box automation tools, including Watch Folders, the MASV API, and MASV Agent, allow anyone – from casual users to data engineers – to quickly configure automated data upload pipelines. For example, third-party collaborators can drag and drop unstructured data into MASV Portals, which can automatically ingest that data to one or more storage locations simultaneously.
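A watch-folder workflow has to decide when a newly arriving file has finished being written before uploading it. One common approach, sketched below with the standard library, is to treat a file as ready once its size stops changing between two polls. This illustrates the general technique only; it is not how MASV Watch Folders are implemented, and the poll interval is an arbitrary example.

```python
import time
from pathlib import Path


def stable_files(folder: Path, interval_s: float = 5.0) -> list[Path]:
    """Return files in `folder` whose size did not change across one polling interval."""
    before = {p: p.stat().st_size for p in folder.iterdir() if p.is_file()}
    time.sleep(interval_s)
    stable = []
    for path, size in before.items():
        # A file still being copied in will usually have grown during the interval.
        if path.exists() and path.stat().st_size == size:
            stable.append(path)
    return sorted(stable)
```

Calling `stable_files()` in a loop and handing each new result to an uploader (while remembering which files were already sent) gives basic watch-folder behavior.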

The flexibility of the MASV platform also allows native integrations with popular data orchestration and MLOps data pipeline automation platforms such as Apache Airflow, Kubeflow Pipelines, Jenkins, n8n, Make.com, or Node-RED to facilitate continuous delivery (CD) data pipelines.

Data engineers can use these integrations to initiate, monitor, and validate massive transfers as part of a unified pipeline. Here’s an example of how the Apache Airflow orchestration platform can work with MASV to automate an AI data pipeline:

Table: AI data ingest orchestration using Apache Airflow data orchestration with MASV

| Pipeline Stage | Airflow Operator | Action |
|---|---|---|
| 1. Detection (trigger) | Sensor (e.g., FileSensor) | Starts the directed acyclic graph (DAG): Watches a specific local folder or staging area; when a new dataset file appears, the sensor succeeds and the rest of the workflow begins. |
| 2. Preparation | BashOperator | Standardizes the data: Runs a shell command (e.g., tar -czf) to compress the raw folder into a single archive or move it to a locked processing directory. |
| 3. Validation (security gate) | PythonOperator | Runs a Python function that uses a library like Microsoft Presidio to scan the file for PII; returns a boolean (True/False) or exit code. |
| 4. Decision | BranchPythonOperator | Reads the result of the validation task: if the scan passes, the run continues to the upload task; if PII is found, it routes to the security alert and the upload is skipped. |
| 5. Transfer (upload) | PythonOperator | Triggers MASV Agent (runs only if validation passed): sends a POST request to the MASV Agent API to upload the file to your S3-linked MASV Portal. |
| 6. Polling | PythonSensor | Monitors progress: Periodically queries the MASV API (GET /packages/{id}) to check status; the task does not succeed until the transfer is 100% complete. |
| 7. Verification | S3KeySensor | Confirms cloud arrival: Checks the specific S3 bucket key to ensure the file has landed and is ready for AI model training. |
| 8. Handoff/alert | SlackWebhookOperator | Notification: Depending on the branch taken, sends either a “ready for training” message or a security alert to your team. |
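The polling stage can be sketched as a plain Python function that a `PythonSensor` calls on each poke: it returns True when the package reports completion, False to keep waiting, and raises on a terminal failure so the task fails fast instead of polling until timeout. The `status` field and the status values below are hypothetical; check the MASV API reference for the real package fields before relying on them.

```python
# Hypothetical status values; confirm the real field names and states in MASV's API reference.
DONE_STATES = {"finalized"}
FAILED_STATES = {"failed", "cancelled"}


def transfer_is_complete(package: dict) -> bool:
    """Poke logic: True when done, False to keep waiting, RuntimeError on a terminal failure."""
    status = package.get("status")
    if status in FAILED_STATES:
        # Raising fails the sensor task immediately instead of letting it poll until timeout.
        raise RuntimeError(f"Transfer {package.get('id')} ended in state {status!r}")
    return status in DONE_STATES
```

In a DAG, a `PythonSensor` (ideally with `mode="reschedule"` so it frees its worker slot between pokes) would call a small wrapper that looks up the package ID recorded by the upload task (for example, via XCom), fetches `GET /packages/{id}`, and passes the parsed JSON to `transfer_is_complete()`.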

How MASV automates the S3 landing

Regardless of which data orchestration tool is used, the workflow also relies on a MASV no-code cloud integration with S3 and automated upload:

- The trigger: Upon verification of good data, the orchestrator uses MASV to upload the dataset to a specific MASV Portal.

- The transfer: MASV accelerates the data transfer over its private network.

- The landing: The MASV Portal is preconfigured using a no-code integration to automatically route all incoming files directly to your Amazon S3 bucket.

- The notification: MASV sends a webhook back to your orchestrator confirming the file is safely in S3, allowing AI training to begin.
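The notification step can be handled by a small endpoint that receives the webhook and decides whether training can start. Below is a minimal, framework-free sketch of that decision logic. The payload fields (`event`, `package_id`) and the `"package.finalized"` event name are hypothetical, so map them to the webhook schema MASV documents. Because webhook deliveries can be retried, the handler also ignores duplicate events.

```python
import json
from typing import Optional

READY_EVENT = "package.finalized"  # hypothetical event name


class WebhookHandler:
    """Turn raw webhook bodies into 'start training' decisions, ignoring repeats."""

    def __init__(self) -> None:
        self._seen: set[str] = set()  # in production, persist this (e.g. in a database)

    def handle(self, body: bytes) -> Optional[str]:
        """Return the package ID to hand off for training, or None if there is nothing to do."""
        payload = json.loads(body)
        if payload.get("event") != READY_EVENT:
            return None
        package_id = payload.get("package_id")
        if not package_id or package_id in self._seen:
            return None  # malformed or duplicate delivery
        self._seen.add(package_id)
        return package_id
```

A production endpoint should also authenticate the sender, using whatever secret or signing mechanism the webhook provider supports, before trusting the payload.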

Although all automated workflows should be supervised by a human, the above workflow requires no specific human intervention: Every dataset is automatically validated, uploaded, encrypted, and moved to storage for AI training.

Example: Pseudocode for a MASV data orchestration integration

Here’s what an Airflow DAG code snippet could look like using Python pseudocode, including a critical validation gate using BranchPythonOperator to prevent bad data from reaching the upload stage:

```python
from airflow.sdk import DAG
from airflow.providers.standard.operators.python import PythonOperator, BranchPythonOperator
from airflow.providers.standard.sensors.filesystem import FileSensor
from airflow.providers.slack.operators.slack_webhook import SlackWebhookOperator
import pendulum
import requests

# Configuration
DATASET_PATH = "/data/incoming/dataset.txt"
# Illustrative MASV Agent endpoint; check the MASV Agent docs for the real host, port, and routes.
MASV_AGENT_URL = "http://masv-agent:8080/api/v1"


def scan_for_pii(**kwargs):
    """
    Simulates a PII scan. In production, import Presidio here.
    Returns the task_id to run next: 'upload_to_masv' if safe, 'security_alert' if unsafe.
    """
    pii_found = False  # Set to True to test the failure path

    if pii_found:
        print("🚨 PII DETECTED! Blocking upload.")
        return "security_alert"
    print("✅ Scan passed. Proceeding to upload.")
    return "upload_to_masv"


def trigger_masv_upload(**kwargs):
    """Ask MASV Agent to upload the validated file to an S3-linked MASV Portal."""
    endpoint = f"{MASV_AGENT_URL}/portals/uploads"  # illustrative route
    payload = {
        "subdomain": "my-s3-portal",  # the Portal's subdomain (placeholder)
        "paths": [DATASET_PATH],
        "package_name": "Validated_Training_Data",
    }
    resp = requests.post(endpoint, json=payload, timeout=30)
    resp.raise_for_status()  # fail the task on HTTP errors so Airflow can retry it
    package_id = resp.json()["id"]  # response field name is illustrative
    print(f"Upload started: {package_id}")
    return package_id  # pushed to XCom so later tasks can poll this package


# DAG definition
with DAG(
    "secure_ai_ingest",
    start_date=pendulum.datetime(2024, 1, 1, tz="UTC"),  # fixed start date, not a moving one
    schedule=None,  # triggered on demand; Airflow 3 uses `schedule`, not `schedule_interval`
    catchup=False,
) as dag:
    # 1. Wait for the file to arrive
    wait_for_file = FileSensor(
        task_id="wait_for_file",
        filepath=DATASET_PATH,
        poke_interval=30,
    )

    # 2. Validation gate: the callable returns the task_id of the branch to follow
    validation_gate = BranchPythonOperator(
        task_id="validate_data",
        python_callable=scan_for_pii,
    )

    # 3a. Success path
    upload_task = PythonOperator(
        task_id="upload_to_masv",
        python_callable=trigger_masv_upload,
    )

    # 3b. Failure path
    alert_task = SlackWebhookOperator(
        task_id="security_alert",
        slack_webhook_conn_id="slack_connection",
        message="🚨 SECURITY ALERT: PII detected in AI Ingest Pipeline. Upload blocked.",
        channel="#security-ops",
    )

    # 4. Success notification. This fires once the upload has started; add a polling
    #    sensor between upload_task and this task to notify only after completion.
    success_notify = SlackWebhookOperator(
        task_id="notify_success",
        slack_webhook_conn_id="slack_connection",
        message="✅ Validated data handed off to MASV for upload to S3.",
        channel="#ai-team",
    )

    # Wiring: the branch not chosen (and everything downstream of it) is skipped
    wait_for_file >> validation_gate
    validation_gate >> upload_task >> success_notify
    validation_gate >> alert_task
```
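The `scan_for_pii` function in the DAG above only simulates a scan. The minimal sketch below shows what a real check could look like using Presidio's `AnalyzerEngine.analyze(text=..., language=...)`, whose results carry a `score` and `entity_type`. The analyzer is passed in so the scanning logic can be tested without installing Presidio and its spaCy model; the 0.5 threshold and chunk sizes are arbitrary examples. Because the file is read as text in overlapping chunks, this suits text datasets, not binary media.

```python
from pathlib import Path

SCORE_THRESHOLD = 0.5  # example confidence threshold; tune for your data


def contains_pii(path: Path, analyzer, chunk_chars: int = 100_000, overlap: int = 200) -> bool:
    """Scan a text file chunk by chunk; True as soon as any finding meets the threshold.

    `analyzer` is expected to behave like presidio_analyzer.AnalyzerEngine. Consecutive
    chunks overlap slightly so an entity split across a boundary is still seen whole.
    """
    tail = ""
    with path.open("r", encoding="utf-8", errors="replace") as fh:
        while True:
            chunk = fh.read(chunk_chars)
            if not chunk:
                return False
            text = tail + chunk
            results = analyzer.analyze(text=text, language="en")
            if any(r.score >= SCORE_THRESHOLD for r in results):
                return True
            tail = text[-overlap:] if overlap else ""


def branch_on_pii(path: str) -> str:
    """BranchPythonOperator callable: choose the next task_id based on the scan."""
    from presidio_analyzer import AnalyzerEngine  # lazy import: heavy dependency

    return "security_alert" if contains_pii(Path(path), AnalyzerEngine()) else "upload_to_masv"
```

To use it, the validation task would pass `python_callable=branch_on_pii` and `op_kwargs={"path": DATASET_PATH}` instead of the simulated scan; the returned task IDs match the ones wired in the DAG.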

The MASV Advantage for Petabyte-Scale Data Ingest

MASV facilitates lightning-fast and reliable AI workflows through cloud transfer acceleration, no-code integrations with other platforms, automations, and an enterprise-grade security and compliance posture – helping to reduce time to market for AI companies.

Its scalable, no‑code platform integrates with storage and other tools to ensure smooth and compliant workflows, complemented by MASV’s core file transfer strengths:

- Speed: Multi-threaded, accelerated transfers for petabyte-scale data movement.

- Security: AES‑256 and TLS 1.2 encryption, IAM and access controls, and a secure cloud platform that eliminates server access and manual patch updates.

- Compliance: SOC 2 Type II, ISO 27001, GDPR, PIPEDA, TPN Gold.

- Automation: API, CLI (MASV Agent), custom webhooks, and Watch Folders for hands‑free automated file transfer workflows.

- Scalability: Seamless handling of any number of files of any size, at any data volume, and a global accelerated network of 400+ servers.

- Reliability: Relentless automatic retries with checkpoint restart in case of interruption, and checksum validation to ensure data integrity.
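MASV handles transfer-level retries and checkpoint restarts itself, but the orchestration calls around a transfer (starting an upload, polling its status) can also fail transiently. Airflow tasks can be given `retries` and `retry_exponential_backoff` arguments; for code running outside Airflow, a minimal standard-library sketch of retries with exponential backoff and jitter looks like this. The attempt count and delays are arbitrary examples.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(
    fn: Callable[[], T],
    attempts: int = 5,
    base_delay_s: float = 1.0,
    max_delay_s: float = 60.0,
    retry_on: tuple[type[BaseException], ...] = (ConnectionError, TimeoutError),
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn(), retrying transient errors with capped exponential backoff plus full jitter."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(1, attempts):
        try:
            return fn()
        except retry_on:
            delay = min(max_delay_s, base_delay_s * 2 ** (attempt - 1))
            sleep(random.uniform(0, delay))  # jitter spreads out simultaneous retries
    return fn()  # final attempt: any error propagates to the caller
```

Note that `requests` raises its own exception types, so a caller using it would pass `retry_on=(requests.ConnectionError, requests.Timeout)`.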

Real‑World Application: MASV in an AI Pipeline

MASV recently worked with Troveo, a video licensing platform for generative AI companies that need content for model training, to ingest an average of 6,000 TB of video per month into Troveo’s Amazon S3 cloud storage bucket using MASV Portals.

- A native integration with S3 helped Troveo ingest petabytes of data to storage each month without manual intervention.

- MASV’s accelerated, private global network provided the speed and reliability Troveo needed to keep its content ingest machine humming at full throttle.

- Free-to-set-up MASV Portals allowed Troveo to generate a Portal per contributor to keep content organized.

- A drag-and-drop interface allowed users of various technical backgrounds to easily upload content without Troveo having to provide technical help to contributors around the world.
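To put Troveo's monthly volume in perspective, here is the sustained average throughput it implies, assuming decimal terabytes and a 30-day month (2,592,000 seconds):

```latex
\frac{6000\ \text{TB}}{30\ \text{days}}
  = \frac{6 \times 10^{15}\ \text{B}}{2.592 \times 10^{6}\ \text{s}}
  \approx 2.31 \times 10^{9}\ \text{B/s}
  \approx 18.5\ \text{Gbit/s}
```

Real ingest is burstier than this average, so peak rates would need to be higher still.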

Building a Secure AI Data Ingest Workflow With MASV

Building AI models isn’t easy, but many companies find that data ingest can be an even bigger bottleneck – especially without the right tools. That’s why a secure, automated AI data ingest pipeline is a must to keep development moving forward and to reduce time to market in a fast-paced environment.

But building such a pipeline doesn’t happen overnight, either. It requires careful consideration and the application of several elements:

- Data sourcing and access control

- Transport and encryption

- Validation and sanitization

- Secure storage

- Data provenance and lineage

- Monitoring and auditing

- Lifecycle management

MASV’s speed, reliability, and simplicity can help expedite the process. And its no-code integrations with cloud and connected on-prem storage, combined with API tools for integrating with data orchestration platforms, make MASV an obvious choice for AI pipelines.

Get in touch with the MASV team to schedule a discovery call or interactive demo to learn more about how MASV can help automate your AI data ingest pipeline.

Shatter the AI Ingest Bottleneck

Ingest mountains of data faster and more reliably with MASV for AI workflows.