An archive—hosted in the cloud or elsewhere—is a little like a storage backup. Unlike a backup, however, an archive is for data that’s stored long term and infrequently accessed.

But what exactly is a cloud archive, how is it different from traditional methods to archive data, and what are some of the top cloud archive best practices you should follow? We’ve got the answers for you. Let’s get it.



Why Archive Media Or Other Data?

Video and post-production houses often use data archiving to free up storage space while keeping access to files they may need later: during an information audit, for disaster recovery, or when a client asks for footage from three years ago, for example.

Archive storage is also known as cold data storage. For video editors and other post professionals, keeping data in cold storage helps free up space in faster, more responsive “hot” storage such as RAID arrays or network attached storage (NAS) devices.

Archives often have strict rules around who can store and access data due to security concerns and because egress from cold storage is expensive and time-consuming.

What is a Cloud Archive?

A cloud archive is exactly what it sounds like—it’s an archive hosted in the cloud, typically via a storage-as-a-service public cloud such as Amazon S3 object storage or Google Cloud Storage. Cloud archiving has been popular ever since companies figured out they can use the cloud to:

- Store archived data more cost-effectively (and with far less maintenance and worry than keeping archived data in-house) at any scale; cloud archiving solutions typically offer practically unlimited scale and cold data storage for a fraction of a cent per GB per month.

- Avoid spending massive CapEx through buying and upgrading expensive on-prem equipment (and OpEx on maintaining, updating, and patching that equipment).

Before the cloud, most media houses used Linear Tape-Open (LTO), a type of magnetic tape, to store archived data.

Cloud archive vs. cloud backup

A cloud archive and cloud backup may sound the same, but they’re not:

- A backup has fresh data copied to it at regularly scheduled intervals, is kept relatively accessible in case data needs to be recovered, and often involves changes in the data as production data evolves in real-time. It’s usually kept on-site (if kept in physical storage) or in easily accessed cloud storage, and isn’t kept indefinitely.

- An archive moves data off-site once and keeps it in a safe location indefinitely. The data isn’t changed or augmented. It’s often a time-consuming process to egress data from archives.

Cloud archive vs. tape archive

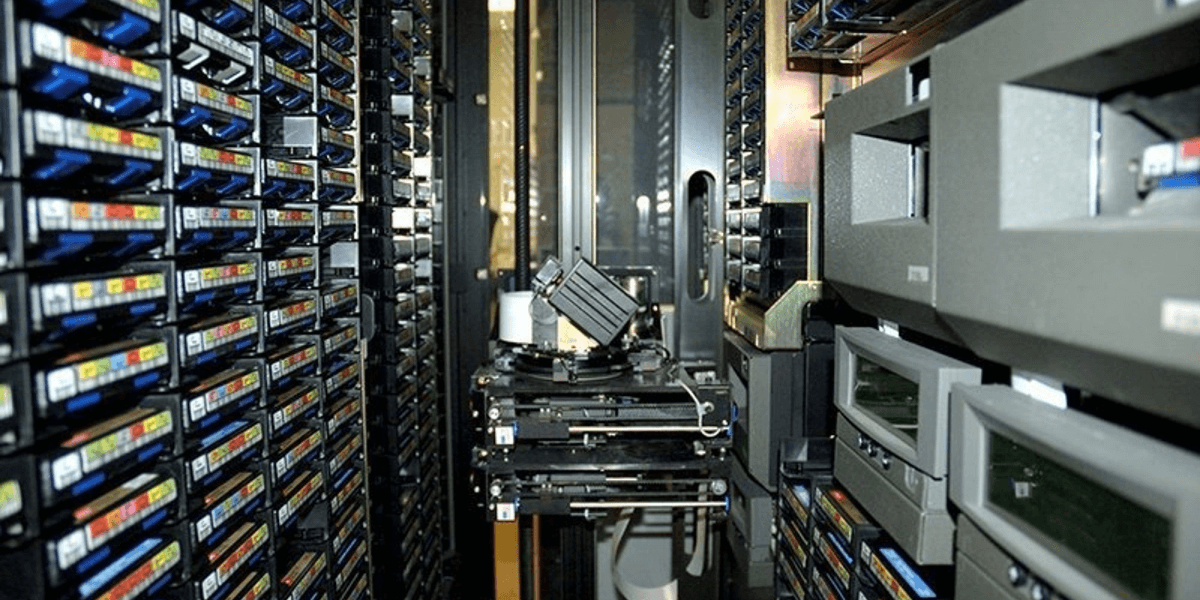

LTO tape has been used for years and is the traditional go-to of any media archivist. LTO tape archives typically hold data on magnetic tape kept in cartridges, which are then stored in a safe location (typically offsite).

- Tape libraries often use robots to store and retrieve cartridges, which can number in the thousands.

- Because each cartridge has a fixed capacity, tape libraries keep expanding as more data is added (although it’s worth noting that an LTO-7 cartridge can hold up to 6TB of uncompressed data).

- Tape libraries are often organized via file systems such as the Linear Tape File System (LTFS).

LTO tape is often used for archiving because tapes are durable, have a long shelf life (up to 30 years), and are cheaper than keeping archived data on disks. The downside of tape libraries is that tapes require specific storage conditions of a consistent temperature of around 70 degrees F with 40 percent relative humidity.

SOURCE: TechTarget

Because of the slow egress associated with some cloud cold storage, it can even be faster to get data out of a tape library than cloud storage.

But LTO tape has generally fallen out of favor when compared to cloud archive options, which are often less expensive with better security, search functionality, redundancy, uptime, and convenience.


Challenges Around Cloud Archive (and How to Solve Them)

Implementing and managing a cloud archive isn’t without its challenges—although some of these challenges often stem from a lack of in-house cloud expertise, which can in turn lead to misconfigurations.

And misconfigurations can then lead to higher costs, lower performance, and a lack of trust around the efficiency of ingesting content into a cloud data archive.

How do cloud misconfigurations happen?

One of the main benefits of cloud storage in general is its virtually unlimited scale in capacity—but the price of unlimited scale is that it’s very easy to shoot yourself in the foot. With that in mind, getting a cloud archive implementation right requires plenty of planning and strategizing.

Unfortunately, many companies skip the planning stage and dive right in.

“(Broadcasters new to cloud) will often experiment and try things themselves initially, but they usually get burned by cost after leaving an EC2 instance on over the weekend, for example,” said Nick Soper, cloud product manager at media cloud consultancy Tyrell, in an interview with MASV.

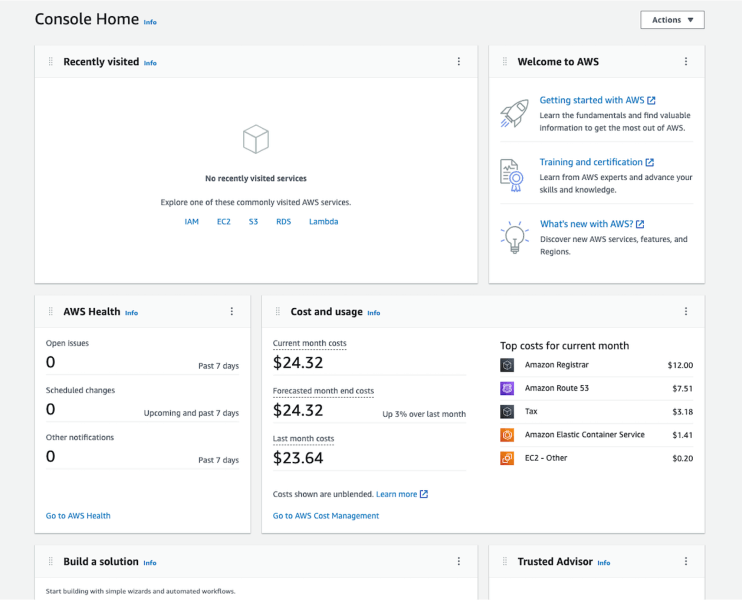

After all, it’s somewhat easy to be lulled into a false sense of security by the shiny management consoles deployed by a cloud archiving service (our advice: Don’t use the console, except in limited circumstances. But more on this later).

SOURCE: Amazon


Challenge 1: Storage cost

Some cloud misconfigurations, like leaving an Amazon S3 object storage bucket accessible by the public (known as a “leaky bucket”), can lead to major cybersecurity and data breach risks.

But other misconfigurations can lead to inefficient workflows, escalating costs, and the misconception that cloud archive is more expensive.

Just one misconfiguration can cause massive spikes in cloud costs—for example, by not locking down archival storage workflows to a specific bucket or path, and allowing business users to ingest current data into a cloud archive.

Cold cloud storage is generally cheaper than hot storage options. But saving current data in archival storage can lead to very high egress fees if (when?) you need to access that data.

For Amazon S3, for example, that means big cost differences depending on the storage class:

| Storage type | Storage cost (per GB per month) |
| --- | --- |
| S3 Standard | $0.021-$0.023 |
| S3 Standard Infrequent Access | $0.0125 |
| S3 Glacier Instant Retrieval | $0.004 |
| S3 Glacier Flexible Retrieval | $0.0036 |
| S3 Glacier Deep Archive | $0.00099 |

As shown above, storage costs per GB decrease as you get into colder and colder storage options.
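To make the gap concrete, here is a rough sketch that turns the storage prices above into a monthly bill. It assumes the listed prices are per GB per month (which is how AWS bills S3 storage), uses the upper end of the S3 Standard range, and treats 1TB as 1,000GB; the function name and the 100TB example are ours, purely for illustration.

```python
# Per-GB monthly storage prices copied from the table above (USD).
# S3 Standard uses the upper end of its listed range.
STORAGE_PRICE_PER_GB = {
    "S3 Standard": 0.023,
    "S3 Standard Infrequent Access": 0.0125,
    "S3 Glacier Instant Retrieval": 0.004,
    "S3 Glacier Flexible Retrieval": 0.0036,
    "S3 Glacier Deep Archive": 0.00099,
}


def monthly_storage_cost(size_tb: float, storage_class: str) -> float:
    """Estimate one month of storage cost in USD (1 TB taken as 1,000 GB)."""
    return size_tb * 1000 * STORAGE_PRICE_PER_GB[storage_class]


if __name__ == "__main__":
    # Hypothetical 100 TB archive, priced in every storage class.
    for name in STORAGE_PRICE_PER_GB:
        print(f"{name}: ${monthly_storage_cost(100, name):,.2f} per month")
```

For that hypothetical 100TB archive, the estimate works out to roughly $2,300 per month in S3 Standard versus about $99 per month in S3 Glacier Deep Archive.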

Great! That means you should just store all your data in cold storage, right?

Wrong—the cost of data egress and retrieval rises with colder storage:

| Storage type | Data retrieval requests (per 1,000 requests) | Data retrievals (per GB) |
| --- | --- | --- |
| S3 Standard | N/A | N/A |
| S3 Standard Infrequent Access | N/A | $0.01 |
| S3 Glacier Instant Retrieval | N/A | $0.03 |
| S3 Glacier Flexible Retrieval | $0.05-$10 | $0.01-$0.03 |
| S3 Glacier Deep Archive | $0.025-$0.10 | $0.0025-$0.02 |

The lesson here: Don’t misconfigure your cloud storage so users ingest current data into your cloud archive, or cold data into hot storage.

The latter can also get expensive, although providers like Amazon typically prorate storage charges by the hour, so as long as you catch it early and move the data to your archive, the storage costs shouldn’t balloon too much.
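To put the retrieval table above into numbers, here is a minimal sketch of what pulling data back out of cold storage might cost. It only covers the two fees in that table (per-request and per-GB retrieval); any data transfer charges for moving the data out of AWS are not listed here and would come on top. The function name and the example workload are hypothetical.

```python
def retrieval_cost(size_gb: float, num_objects: int,
                   per_gb: float, per_1000_requests: float) -> float:
    """Estimate retrieval fees in USD from the two rates in the table above."""
    data_fee = size_gb * per_gb
    request_fee = (num_objects / 1000) * per_1000_requests
    return data_fee + request_fee


if __name__ == "__main__":
    # Hypothetical restore: 5,000 GB spread across 20,000 files, priced at the
    # upper end of the S3 Glacier Deep Archive ranges ($0.02/GB, $0.10/1,000 requests).
    print(f"${retrieval_cost(5000, 20000, 0.02, 0.10):,.2f}")
```

With those assumed inputs the estimate comes to about $102 in retrieval fees, before counting the hours or days spent waiting for the data.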

There are also the hidden costs of traditional archive storage to think about: housing the tapes, classifying them, and managing a physical space can all add up.

Challenge 2: Performance

Misconfigurations can also distort your view of cloud performance, especially if users save current data to cold storage and need to get it out again (similar to our cost example above).

It can take a while to even get things started when requesting data from cold storage: You first have to wait for the data retrieval request to process, which usually takes hours.

Then the archive needs to retrieve the data—which literally could take days.

Similar to costs, the turnaround time for data retrieval from cold storage largely depends on the level of cold storage:

| Storage type | Data retrieval turnaround time |
| --- | --- |
| S3 Standard | Milliseconds |
| S3 Glacier Instant Retrieval | Milliseconds |
| S3 Glacier Flexible Retrieval | 1-5 minutes (expedited), 3-5 hours (standard), 5-12 hours (bulk) |
| S3 Glacier Deep Archive | 12-48 hours |

Even though cloud providers like Amazon can be extremely forgiving when you make a mistake, let’s just say you don’t want to have crucial business data you need right now stuck in Glacier Deep Archive.

💡 Just what is an acceptable turnaround time for data retrieval depends on what kind of data and your use case. In some cases, flexible retrieval options may be best.
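One way to reason about acceptable turnaround is to start from your deadline and work backwards. The sketch below uses the upper bound of each S3 Glacier Flexible Retrieval range from the table (the 3-5 hour tier is the one AWS calls Standard) and picks the slowest tier that still fits, on the assumption that slower tiers cost less. The function name is ours, and real retrievals can vary.

```python
# Worst-case turnaround in hours for S3 Glacier Flexible Retrieval tiers,
# taken from the upper bound of each range in the table above.
# Ordered slowest first, assuming slower tiers are the cheaper ones.
WORST_CASE_HOURS = [
    ("bulk", 12.0),
    ("standard", 5.0),
    ("expedited", 5 / 60),
]


def slowest_tier_within(deadline_hours: float):
    """Return the slowest tier whose worst case meets the deadline, or None."""
    for tier, hours in WORST_CASE_HOURS:
        if hours <= deadline_hours:
            return tier
    return None  # No tier is fast enough; the data belongs in warmer storage.


if __name__ == "__main__":
    for deadline in (24, 6, 1):
        print(f"Need it within {deadline}h: {slowest_tier_within(deadline)}")
```

If the function returns nothing for your deadline, that is a strong hint the data should not have been sent to cold storage in the first place.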

Challenge 3: The management console/portal

We’re just gonna say this right now: If you’re using a management console or portal such as AWS Management Console, Azure Portal, or Google Cloud Console to manage your cloud services, you’re probably doing it wrong.

Management consoles for any big public cloud provider usually look good and are designed to be easy for a new user to acclimatize to: All you do in many cases is tick a few boxes and you’ve configured your system.

- Problem is, ticking those boxes is really easy—and ticking the wrong one can have cascading negative effects that may not be obvious at first, but can create a tremendous amount of problems as time passes.

- Many consoles can be confusing and have inconsistent rules between modules, making it next to impossible to fully understand the effect a certain change will have on the rest of the system.

- It’s also very difficult for your collaborators, in case of a misconfiguration, to see what was done.

For these reasons we recommend not using the management consoles of public cloud providers to deploy to production. The consoles may be a helpful starting point, but they don’t set you up for success in the long term.

It’s far less dangerous and more effective to deploy using infrastructure as code (IaC) or using a command-line tool. There are several advantages to this approach:

- There’s a review process, where any change you want to make is visible to other team members. You can enforce review rules making it impossible to deploy a change without someone else reviewing it first.

- You can also use automated scanning tools to enforce rules around performance and security, such as not allowing an S3 bucket to be set as public.

Managing your cloud architecture with IaC or the command line can take a lot of configuration, time, and expense to set up. But the upside is that you end up saving a lot in cost, vulnerabilities, and damage from needless mistakes.
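As a rough illustration of the kind of automated rule described above, here is a tiny check that could run in a review pipeline before a change is deployed. The bucket definition format (a list of dictionaries with `name` and `acl` keys) is a made-up stand-in for whatever your IaC tool produces; `public-read` and `public-read-write` are S3’s canned ACL names for public access. Real scanning tools check far more than this.

```python
# S3 canned ACLs that expose a bucket to the public.
PUBLIC_ACLS = {"public-read", "public-read-write"}


def find_public_buckets(buckets: list[dict]) -> list[str]:
    """Return the names of bucket definitions that request a public ACL.

    Each definition is a hypothetical dict such as
    {"name": "example-archive", "acl": "private"}; a missing "acl" key
    is treated as private.
    """
    return [b["name"] for b in buckets if b.get("acl", "private") in PUBLIC_ACLS]


if __name__ == "__main__":
    planned = [
        {"name": "example-archive", "acl": "private"},
        {"name": "example-dailies", "acl": "public-read"},
    ]
    offenders = find_public_buckets(planned)
    if offenders:
        # In a pipeline, a non-zero exit would block the deployment.
        raise SystemExit(f"Public buckets are not allowed: {offenders}")
```

Because the check runs on the proposed change rather than on the live system, the mistake is caught at review time instead of after the bucket is already exposed.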

Implementing and Managing a Cloud Archive: Best Practices

There are two main things you must keep in mind when implementing a cloud archive:

- Make sure to configure your storage ingest pathways so the right people or applications have access to the right storage.

- Make sure to properly classify your data so any data ingested automatically goes into the right storage bucket.
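For the first point, one common approach on AWS is an IAM policy that only allows uploads under a single bucket path. The sketch below builds such a policy document as a Python dictionary; the bucket name and prefix are hypothetical, and a production policy would likely need more statements (listing, encryption conditions, and so on) than this minimal example.

```python
import json


def archive_upload_policy(bucket: str, prefix: str) -> dict:
    """Build an IAM policy document allowing uploads only under one prefix."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowArchiveUploadsOnly",
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                # Objects outside this path are not covered by the Allow,
                # so uploads elsewhere are denied by default.
                "Resource": [f"arn:aws:s3:::{bucket}/{prefix.strip('/')}/*"],
            }
        ],
    }


if __name__ == "__main__":
    # "example-archive" and "ingest" are placeholder names.
    print(json.dumps(archive_upload_policy("example-archive", "ingest/"), indent=2))
```

Attaching a policy like this to the users or applications that feed the archive keeps current project data from landing in cold storage by accident.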

Here are some other tips for setting up your cloud archive:

- Defer to hot storage: As a rule, consider storing all data in hot storage first.

- Automate data to cold storage: Set up an automated system that eventually re-classifies and moves that data into cold storage after a set period of time (you can purchase a turnkey solution or build your own rules around this). This process alone makes it much more difficult to make an expensive mistake. You can use a data upload/file transfer tool that integrates with cloud storage, then configure your archive rules within the cloud storage platform (e.g., archive unaccessed data after X days).

- Use the console for PoCs only: Use the management console for proofs of concept only in a sandbox account (very carefully, and make sure to monitor your costs on that account). Once the PoC is finalized, deploy into production using IaC or command line. If you do use the web console in production and make a mistake, you’ll need to remember everything you clicked so you can replicate the issue. Command line tools and IaC avoid this scenario because every change is written down, and a malformed command simply fails instead of being applied.

- Be stingy with privileged access: IT administrators should only give privileged storage access to business users or functions who need to save data to archive.
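The “automate data to cold storage” tip maps onto what S3 calls a lifecycle configuration. Here is a minimal sketch that builds one as a Python dictionary, in the shape accepted by boto3’s `put_bucket_lifecycle_configuration` (that call is not made here). The prefix, the 90-day threshold, and the function name are placeholders. Note that a plain lifecycle transition counts days since the object was created, not since it was last accessed.

```python
import json


def archive_rule(prefix: str, days: int, storage_class: str = "GLACIER") -> dict:
    """Build an S3 lifecycle configuration that moves objects to cold storage.

    Objects under `prefix` transition to `storage_class` once they are
    `days` old. "GLACIER" is the S3 storage class name for Glacier Flexible
    Retrieval; "DEEP_ARCHIVE" would target Glacier Deep Archive.
    """
    return {
        "Rules": [
            {
                "ID": f"archive-after-{days}-days",
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [{"Days": days, "StorageClass": storage_class}],
            }
        ]
    }


if __name__ == "__main__":
    # Hypothetical rule: archive everything under projects/ after 90 days.
    print(json.dumps(archive_rule("projects/", 90), indent=2))
```

Keeping a rule like this in version control alongside the rest of your IaC means the archive policy gets the same review process as any other change.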

Effortlessly Ingest Data To Cloud Archive With MASV

Setting up and managing a cloud archive along with your other cloud storage can be a lot of work and requires a great deal of time investment on the front end.

But all that time and effort is worth it, because it means you’ve systematically thought your data archiving process through—which, in turn, means fewer misconfigurations and costly errors.

On the other hand, managing several storage destinations and shared access—from hot storage to archive—can quickly turn into an administrative nightmare for IT teams who must manage multiple storage platforms and user permissions, all while handling system security. But MASV Centralized Ingest can help simplify the data ingest process across all your cloud storage while improving security.

Centralized ingest is a hub to connect, manage, and automate data ingestion into storage without having to manage multiple users and permissions at the storage platform level.

IT admins can easily connect shared storage (like a cloud archive) through MASV’s browser interface, then configure ingest access within a centralized, secure, and automated gateway to all your cloud storage (including infrequent access storage classes, like S3 Glacier). Project teams can then use MASV to automate media delivery into shared storage using a user-friendly upload Portal, enhancing productivity and simplifying administration.

Sign up for MASV for free today.
